#52 - One KPI to Rule Them All - Part 2/3

How to Measure Adoption in Internal AI Product Work

#beyondAI

In Part 1, I made a claim:

Adoption is the one KPI that rules all others.

Not because it replaces business metrics, but because it is the only signal available early enough to matter. It tells you whether what you’ve built is being used. Because without usage, every other success metric stays out of reach.

This belief didn’t arrive all at once. It came from years of building internal AI products that technically worked, but quietly failed. And still, they were celebrated.

That never felt right.

How can we, as a team, sometimes as a department, celebrate something without knowing if it created real value for the people who were supposed to use it?

Was I wrong? Or is this simply how big companies define success?

Back when I owned a startup and worked in others, we never celebrated a launch just for launching. We celebrated because now we could finally test whether we had built something real. Something people wanted. Something people would pay for. A launch was never the end. It was the moment the real work began:

finding product-market fit,

solving real user problems,

and learning if our solution deserves to grow.

But when I entered the world of large enterprises, I noticed something different.

Delivery became the final goal.

“Brilliant, team. Let’s move on to the next project.”

And honestly, I became allergic to that mindset.

Because these weren’t real products. They were internal service deliveries. We built what someone up the chain had asked for, without taking responsibility for whether it actually worked. Without knowing whether it ever made a difference.

For the last ten years, I’ve been on a mission to shift that.

To establish more solid product thinking in internal AI work. To treat these solutions not as technical outputs, but as real products. Not as projects, but as investments in value creation.

And that shift always brings me back to the same question:

How can we truly know that what we’ve built is valuable?

How can we show, without guesswork or slide decks, that something meaningful is happening?

For me, the answer begins with user adoption.

Because in internal AI, we don’t have all the signals that external products offer. There’s no revenue line. No churn metric. No market traction to point to. We can’t always follow the money, but we can follow the behavior.

And over the years, I’ve learned this:

Adoption is the only KPI worth tracking first. It’s the beginning of everything else.

So in this second part of the series, let’s go deeper. Let’s unpack what adoption really means. Let’s look at how to measure it, and let’s explore how it becomes your most practical tool, not just to prove value, but to improve your product, align your stakeholders, and earn the credibility to keep building.

Because if adoption is the signal that rules them all, we owe it to ourselves to treat it like one.

What Adoption Means for Internal AI Products

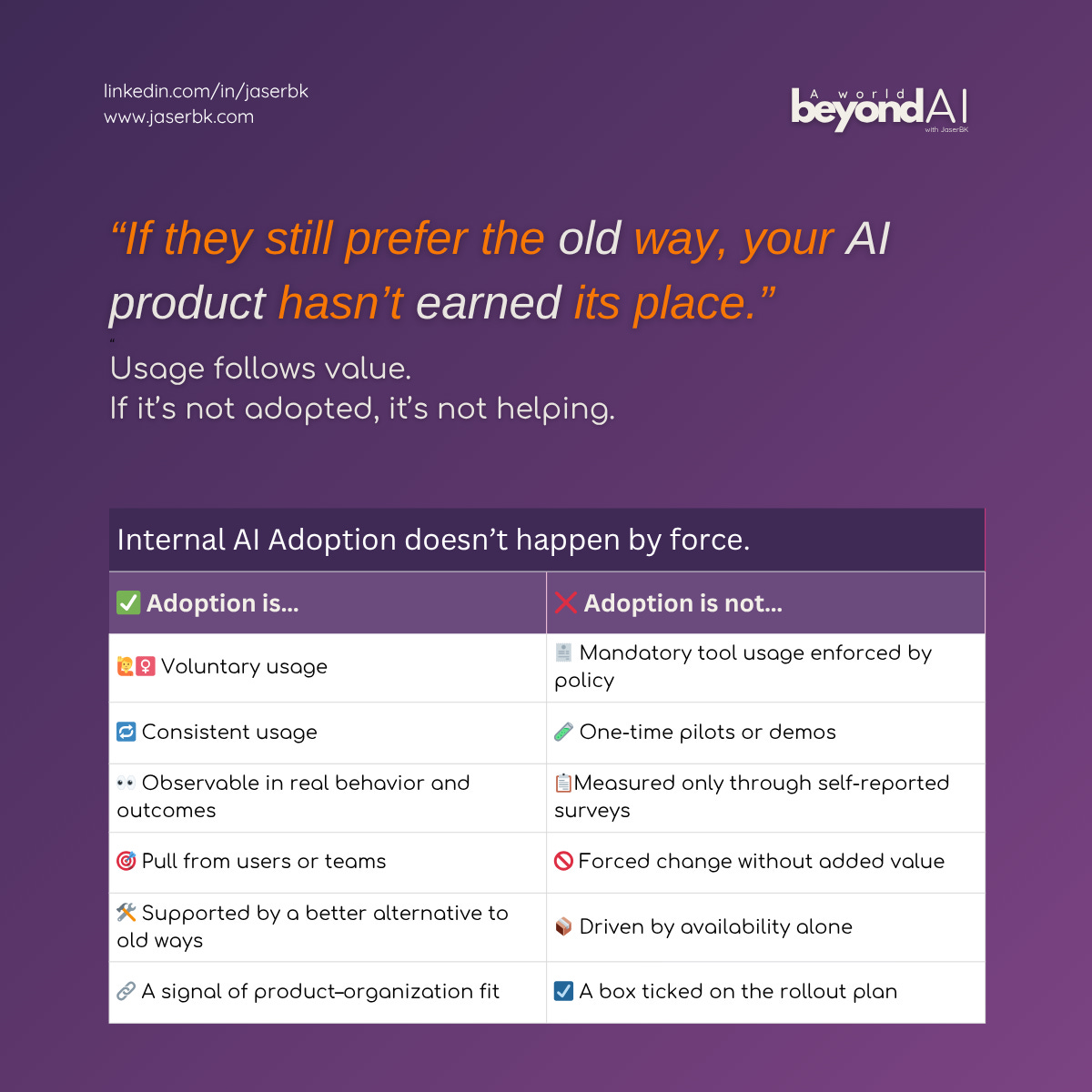

Adoption is a word we all use. But most of us mean different things when we say it. Sometimes we mean access. Sometimes usage. Sometimes trust. Sometimes, just awareness. In some dashboards, adoption is binary: “Is it being used or not?” In some project meetings, it’s reduced to a launch checklist: “Did we roll it out to users?” And sometimes, worst of all, it’s assumed to be automatic. As if deployment equals adoption.

It doesn’t.

In internal AI product work, adoption is rarely immediate. And it’s never guaranteed. Not even when the model is strong. Not even when the integration is clean. Not even when the business case is sound. Because the truth is this:

Adoption is not a feature of the product. It’s a behavior.

It lives outside the product team. It lives in the people you’re trying to help. In how they choose to work, what they’re trying to get done, and what alternatives they already trust. That’s why we need to be much more precise about what we mean by it.

To me, internal AI product adoption means this:

People are using your product voluntarily and consistently, for the purpose it was designed to support — and sometimes even beyond that.

Let’s unpack that. And begin with the most important one.

Voluntarily means it wasn’t forced. People chose it because it made sense. Because it helped. Because the alternative was worse, or simply more work.

In an enterprise setup, it’s common that an internal tool is introduced and employees are required to use it. Think CRM systems, where there is no alternative. Usage is enforced. Compliance is easy to measure. Adoption, in this case, becomes inevitable.

But for AI products, this rarely holds true.

By nature, AI products are designed to improve workflows, not replace them. They assist, guide, recommend, or automate. But rarely do they become the only way to get a task done. The outcome still matters more than the method. As long as the deliverable arrives, no one questions how it was achieved.

That’s what makes internal AI product adoption harder to earn.

Consistently means the behavior is stable. Not a spike. Not a one-time test. Not a pilot use case that disappears once the sponsor moves on.

Real adoption creates patterns. Patterns you can observe, learn from, and improve. And consistent usage is what matters most. Because at the end of the day, that is how you prove your product is trusted to do the job it was built for. Not once. But every time. It becomes the default. Not just an option.

So once you’ve built a product and taken real steps to drive adoption, reaching that point tells you something powerful. It means you’ve achieved alignment. Between what you built, what users actually need, and how they truly behave.

That’s why a technically “successful” product can still fail. Because

AI product adoption doesn’t happen when you push something into a process. It happens when the process pulls it in.

It’s not a rollout. It’s a fit. And guess what, we have it, too. The famous product-market fit. Only here, it has a different name. Product-organization fit.

The point where your AI product doesn’t just exist inside the company. It belongs.

And fit always emerges through comparison. Users try your product. They compare it, consciously or not, to whatever they were doing before.

Manual steps. Excel hacks. Asking colleagues. Ignoring the problem.

If your product helps more than it hurts, if it feels smoother, faster, safer, or more reliable, they come back. If it doesn’t, they don’t. Adoption happens when the alternative is worse.

And once that’s true, once you’ve reached the invisible tipping point where the user stops asking, “Should I use this?” and simply starts using it, that’s when the product becomes real.

Not because you said so. Because they did.

How to Track AI Adoption in a Company

While adoption rate is the KPI we care about most, it doesn’t appear on its own. It has to be constructed. Built from the bottom up. It’s a composite, derived from smaller, observable metrics that together signal whether adoption is really happening.

It only makes sense when you know who the product is for and whether the people you had in mind are actually using it. In other words: before you can calculate adoption, you need two things.

(1) A clear picture of your initial user base.

(2) A way to measure voluntary and consistent usage across that base.

Without these two, you can’t derive a meaningful adoption rate.

01 The Initial User Base

Know the People You Choose First

The word “rate” already tells you that you need a denominator.

And here’s where most teams accidentally sabotage their own success.

In internal AI product development, you almost always start with a specific user group. Either they approached you and asked for AI help, or you pitched an idea to them and got them onboard. This team becomes your initial user base. It might be sales, ops, or marketing from a particular department. But it could just as easily be a niche team from anywhere in the organization.

Your solution is meant to support their daily work. That’s the scope. And that’s the user base you should measure adoption against, at first.

It’s not only important to define who your initial users are, but also how many there are.

For example, the company may have 100 salespeople in total.

But if your product is designed for, and piloted with, just 20 in the B2B segment, stick with 20 for now. Don’t get distracted by the bigger number.

The reason is twofold:

You can’t assume fit across the board.

Just because a product works for 20 users doesn’t mean it fits the workflows, needs, or systems of the other 80. Different regions, different setups, different behaviors. It’s a false assumption.

You’ll dilute your metric.

If you count all 100 salespeople in your denominator while only 20 were ever involved, your adoption rate looks artificially low and you’ll find yourself explaining numbers that don’t reflect reality.

Let’s make this concrete.

You build an AI tool for the 20 people in your B2B sales team. After rollout, 18 of them use it consistently. That’s a 90% adoption rate. Strong signal. Great outcome.

But a few weeks later, someone tells you there’s another sales team with 80 people. You add them to your target base, even though they haven’t seen the tool yet. Now, your adoption rate drops to 18%.

Technically correct.

But totally misleading.

That’s why you need to define and track user groups separately. Only expand your base when it makes sense to. Otherwise, you confuse the narrative and weaken the story your metrics are telling.
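The arithmetic behind this example can be sketched in a few lines of Python. The function name `adoption_rate` and the group labels are illustrative assumptions, not part of any real tool. The numbers are the ones from the example above, with the second team counted as zero consistent users because they haven’t seen the tool yet.

```python
def adoption_rate(consistent_users: int, user_base: int) -> float:
    """Share of a defined user base that uses the product consistently."""
    if user_base <= 0:
        raise ValueError("user_base must be a positive number")
    return consistent_users / user_base


# (consistent users, size of user base) per group; labels are hypothetical.
groups = {
    "B2B sales (pilot)": (18, 20),
    "Second sales team (not onboarded)": (0, 80),
}

# Tracked separately, each group tells its own story.
per_group = {name: adoption_rate(c, n) for name, (c, n) in groups.items()}

# Lumped into one denominator, the pilot's strong signal is diluted.
total_consistent = sum(c for c, _ in groups.values())
total_base = sum(n for _, n in groups.values())
diluted = adoption_rate(total_consistent, total_base)

for name, rate in per_group.items():
    print(f"{name}: {rate:.0%}")
print(f"All 100 in one denominator: {diluted:.0%}")
```

Keeping one entry per group is the whole point of the design: the pilot stays at 90%, and the second team gets its own number once it has actually been onboarded.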

02 Voluntary Usage

When People Choose Your Product

Voluntary means people are choosing your product without being forced to.

And in internal AI scenarios, that’s almost always the case. Unlike CRM systems or time-tracking tools, no one mandates the use of your AI assistant. People already have their own workarounds. Their spreadsheets. Their templates. Their muscle memory.

So if they do use your product, it’s a signal. It means:

✅ Your product makes their job easier.

✅ Your product is a better alternative.

✅ They’ve chosen it over what they had before.

That’s worth tracking.

Here’s one of the strongest indicators:

New users over time, beyond your original scope.

As described above, most internal AI products begin with a tightly scoped user group, often a single team who co-develop or pilot the idea. That’s your baseline.

But if the product has real value, you’ll begin to see interest from outside that circle.

Someone asks if they can try it. Someone else shows up in the logs. Suddenly, new names appear, without formal rollout.

That’s not just usage. That’s organic proof of value.

So here are two simple, telling metrics to track:

Unique users / usages per month

Unique users / usages per department per month

You don’t need perfection here. But if those numbers grow, especially from teams not part of your original user group, it’s a sign that voluntary adoption is unfolding.

And that matters.

Nobody voluntarily uses a product that makes their job harder.
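If your product writes any kind of usage log, both metrics fall out of a simple aggregation. Here is a minimal sketch, assuming a log of `(user_id, department, day)` entries. The log format, the names, and the `summarize` helper are all hypothetical, and the sample data is made up purely for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log entries: (user_id, department, day of use).
usage_log = [
    ("anna", "Sales", date(2024, 1, 9)),
    ("anna", "Sales", date(2024, 1, 23)),
    ("ben", "Sales", date(2024, 1, 11)),
    ("anna", "Sales", date(2024, 2, 6)),
    ("ben", "Sales", date(2024, 2, 8)),
    ("carla", "Operations", date(2024, 2, 14)),  # outside the original group
]


def summarize(log, by_department=False):
    """Count unique users and total usages per month, optionally per department."""
    users = defaultdict(set)
    usages = defaultdict(int)
    for user, department, day in log:
        month = day.strftime("%Y-%m")
        key = (month, department) if by_department else month
        users[key].add(user)
        usages[key] += 1
    return {
        key: {"unique_users": len(users[key]), "usages": usages[key]}
        for key in sorted(users)
    }


per_month = summarize(usage_log)
per_department = summarize(usage_log, by_department=True)

for key, stats in per_department.items():
    print(key, stats)
```

In this made-up log, the interesting row is the one for Operations in February: a department that was never part of the original scope shows up on its own.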

03 Consistent Usage

When Your Product Becomes a Habit

Now let’s move to the deeper layer. If someone uses your product once, it hasn’t been adopted. Even if the demo was a hit and the pilot went well. Real adoption happens when your product becomes part of how work gets done.

That means behavior that’s repeatable. Predictable. Consistent.

But consistency is always contextual. Some products support daily tasks. Others support monthly processes. One AI product might be used 40 times a week. Another, once a quarter. Both can be adopted, if they match the rhythm of the task they support.

So before tracking anything, ask yourself:

What’s the expected usage frequency for this product?

Does the current behavior align with that expectation?

Are users coming back or was it a one-time test?

Once you’ve clarified that, these are the metrics worth watching:

Usage frequency per user

Repeat usage across relevant timeframes (weekly, monthly, quarterly)

These show whether your product is truly becoming embedded in how people work or if it’s just another tool they tried once and left behind.

These basic signals are already enough to give you a sense of adoption rate. If people are coming back, using it again and again, you’ve done something right.
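Both metrics can be derived from the same kind of event data. The sketch below assumes each usage event is tagged with a period that matches the rhythm of the task (a week, a month, a quarter). The event format, the `returning_users` helper, and the sample data are hypothetical.

```python
from collections import Counter

# Hypothetical usage events: (user_id, period). Pick the period that matches
# the task's rhythm; months are used here only as an example.
events = [
    ("anna", "2024-01"), ("anna", "2024-01"), ("anna", "2024-02"),
    ("ben", "2024-01"), ("ben", "2024-02"), ("ben", "2024-02"),
    ("carla", "2024-01"),  # tried it once, never came back
]


def returning_users(events, periods, expected_per_period=1):
    """Users who met the expected usage in every one of the given periods."""
    counts = Counter(events)  # (user, period) -> number of uses
    users = {user for user, _ in events}
    return sorted(
        user
        for user in users
        if all(counts[(user, period)] >= expected_per_period for period in periods)
    )


periods = ["2024-01", "2024-02"]

# Usage frequency per user across the whole observation window.
frequency = Counter(user for user, _ in events)

# Repeat usage: who shows up in every period at the expected frequency?
consistent = returning_users(events, periods, expected_per_period=1)

print(dict(frequency))
print(consistent)
```

The `expected_per_period` parameter is where the answer to “what’s the expected usage frequency for this product?” goes. A daily tool and a quarterly tool use the same logic with different periods and thresholds.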

04 Calculating Adoption Rate

Let’s try to bring this all together with a working example. We’ll use what we’ve learned to create a first draft of an adoption rate formula, based on a potentially real internal AI product: The Tender Assistant. Let’s see what adoption really looks like, when it’s measured with care.

This product is designed to support teams working on public tenders: automating data collection, identifying inconsistencies in documents, and helping prepare responses more efficiently. It wasn’t meant to replace a mandated process, nor to be rolled out through a mandatory toolchain. It was just an enhancement, a GenAI use case meant to improve a high-effort workflow.

So, how do you track adoption here?

Step 1: Define the Initial User Base

We start with one department: the Strategic B2B Sales team, responsible for responding to complex tenders in a specific region.

There were 12 active users involved in the process: bid managers, solution architects, and legal reviewers. That became our first user group.

We won’t include other sales regions yet, and we won’t count adjacent teams. We focus on the 12. This gives us a clear denominator for early adoption tracking.

Step 2: Track Voluntary Usage

As mentioned before, no one is forced to use an internal AI product. That’s the nature of this work. We introduce the product. We support the onboarding. We train the core users. And then, we wait. But we don’t disappear.

We stay available and close. We answer questions, fix what’s broken, and listen to what’s missing. Because adoption doesn’t happen in a moment. It unfolds over time.

In the first few weeks, we start to see the signals.

11 out of 12 users accessed the Tender Assistant at least once.

9 out of 12 returned to it more than once.

6 out of 12 used it across at least three separate tender processes.

That already tells us something: people are choosing the product, not just trying it, but using it when it counts.
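Expressed against the denominator of 12, these three counts form a small funnel. A quick sketch, using only the numbers reported above (the stage labels and variable names are my own):

```python
USER_BASE = 12  # initial user group from Step 1

# Counts reported for the first few weeks.
funnel = {
    "accessed at least once": 11,
    "returned more than once": 9,
    "used across three or more tenders": 6,
}

# Share of the initial user base reaching each stage.
shares = {stage: count / USER_BASE for stage, count in funnel.items()}

for stage, share in shares.items():
    print(f"{stage}: {funnel[stage]}/{USER_BASE} = {share:.0%}")
```

That works out to roughly 92%, 75%, and 50% of the initial group.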

But then came the stronger sign:

In the second month, the Large Deals unit - a completely separate team - reached out and asked for access. They had heard about the tool and wanted to see if it could help them, too. We hadn’t pitched it. They had asked for it. That’s not just growth. That’s pull.

That’s adoption trying to spread.

Still, we treat them differently and carefully.

We don’t add them to the original adoption rate formula just yet. We start a new onboarding journey, map their specific workflows, and learn what overlaps and what doesn’t. Some of the features work. Some don’t. Their usage is still low, but it’s early and, more importantly, we’re tracking them in a separate bucket.

Step 3: Track Consistent Usage

We look at usage frequency aligned to the actual rhythm of tenders. This team responded to 2–3 tenders per month, typically with a 1–2 week turnaround. So we expected the tool to be used at least once per tender.

What we saw:

7 out of 12 users used the tool during all tenders in the second month.

The average usage per user per month rose from 1.3 to 2.7.

Usage logs showed task-specific prompts being reused - a good sign of habit formation.

This confirmed that it wasn’t just being tested. It was becoming part of the process.
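One way to check “used the tool during all tenders” is to compare each user’s logged tenders with the tenders that ran that month. The sketch below is an assumption about how such a log could look, not how we actually stored it. The tender IDs, user names, and helper functions are hypothetical, and it simplifies by assuming every user works on every tender.

```python
# Hypothetical data for one month: the tenders that ran, and the tenders
# on which each user invoked the tool (one entry per use).
tenders_this_month = {"T-01", "T-02", "T-03"}
tool_usage = {
    "anna": ["T-01", "T-02", "T-03", "T-03"],
    "ben": ["T-01", "T-03"],
    "carla": [],
}


def used_in_all_tenders(tool_usage, tenders):
    """Users whose logged tenders cover every tender of the month."""
    return sorted(
        user for user, used in tool_usage.items() if tenders <= set(used)
    )


def average_usage_per_user(tool_usage):
    """Mean number of tool uses per user, counting inactive users as zero."""
    return sum(len(used) for used in tool_usage.values()) / len(tool_usage)


print(used_in_all_tenders(tool_usage, tenders_this_month))
print(average_usage_per_user(tool_usage))
```

Counting inactive users as zero in the average is a deliberate choice here: it keeps the number tied to the full user base rather than only to those who showed up.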

Step 4: Build the Adoption Rate Formula

We kept it simple to start:

Adoption Rate = (Number of consistent, voluntary users in initial group) / (Total number of users in initial group)

In this case:

7 users showed consistent usage (aligned with tender frequency)

12 users in initial group

→ Adoption Rate = 58%

But that number doesn’t stand alone. We tracked growth as well.

After onboarding the Large Deals team (10 new users), we tracked their behavior separately for a month before merging them into the broader adoption metric. This kept our insights clean and our stories honest.
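Here is a minimal sketch of the formula with separate buckets per cohort. The data structure and the `report` helper are assumptions for illustration. The Large Deals cohort deliberately has no consistent-user count, because we had not measured one yet at that point.

```python
def adoption_rate(consistent_users: int, user_base: int) -> float:
    """Consistent, voluntary users divided by the cohort's total users."""
    if user_base <= 0:
        raise ValueError("user_base must be a positive number")
    return consistent_users / user_base


# Each cohort is tracked in its own bucket. None means "not measured yet".
cohorts = {
    "Strategic B2B Sales": {"user_base": 12, "consistent_users": 7},
    "Large Deals": {"user_base": 10, "consistent_users": None},
}


def report(cohorts):
    """Return a readable adoption status per cohort, without merging them."""
    status = {}
    for name, cohort in cohorts.items():
        if cohort["consistent_users"] is None:
            status[name] = "tracking, not yet measured"
        else:
            rate = adoption_rate(cohort["consistent_users"], cohort["user_base"])
            status[name] = f"{rate:.0%}"
    return status


for name, value in report(cohorts).items():
    print(f"{name}: {value}")
```

The initial group comes out at 58%, and the new cohort stays visibly unmeasured instead of dragging the first number down.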

So far, we’ve talked about how to measure adoption rate. And in this case, we focused on signals from a GenAI use case - the Tender Assistant.

These metrics are foundational. But depending on the nature of your AI product, they might not be enough. Not every AI solution looks the same. Some are workflow assistants. Others are quiet automation. Some surface insights. Others shape decisions.

And depending on how your product is used - and by whom - different signals will matter. What counts as "real usage" for one AI product might be irrelevant for another.

That’s why we need to expand our view.

And yes - even though I said this would just be a two-part series - there’s more to unpack.

In the next article(s), we’ll explore:

Different AI Products, Different Signals

How to Use Adoption to Drive Product Decisions

Using Adoption in Stakeholder Conversations

Adoption and Long-Term Funding

Hope to see you then.

JBK 🕊️