Do you really know what Bias is? (Part 1/2)

A must-know for every AI expert, in particular AI Product Managers

#beyondAI

Every AI expert is (or should be) familiar with the term bias 🤔. However, we often assume our stakeholders also understand what we mean by it. When I mention this term to my stakeholders, they usually nod in agreement, but it's a specific kind of nodding. It's more like, "Oh, not this again 🙄. Do you really expect me to grasp why bias is causing poor predictions? Just deliver what I need...".

I’m not saying our stakeholders are dumb; they simply understand the term differently. Many of us try to explain to our stakeholders, or at least make it clearer, that we can't currently provide a better solution because of the underlying bias, and that they should consider devising a strategy to mitigate it. The simplest explanation, and often an accurate one, is, well, “It’s a bias issue” 🤷‍♂️.

But this approach isn't just unfair to our stakeholders: if neither they nor we understand which type of bias we're referring to, the consequences can be significant.

When I first began exploring this topic, I hadn't planned on diving so deep. Yet, as my exploration progressed, it became clear that merely scratching the surface wouldn't suffice, and I found myself compelled to unpack the concept further.

I'm convinced it's a worthwhile endeavor.

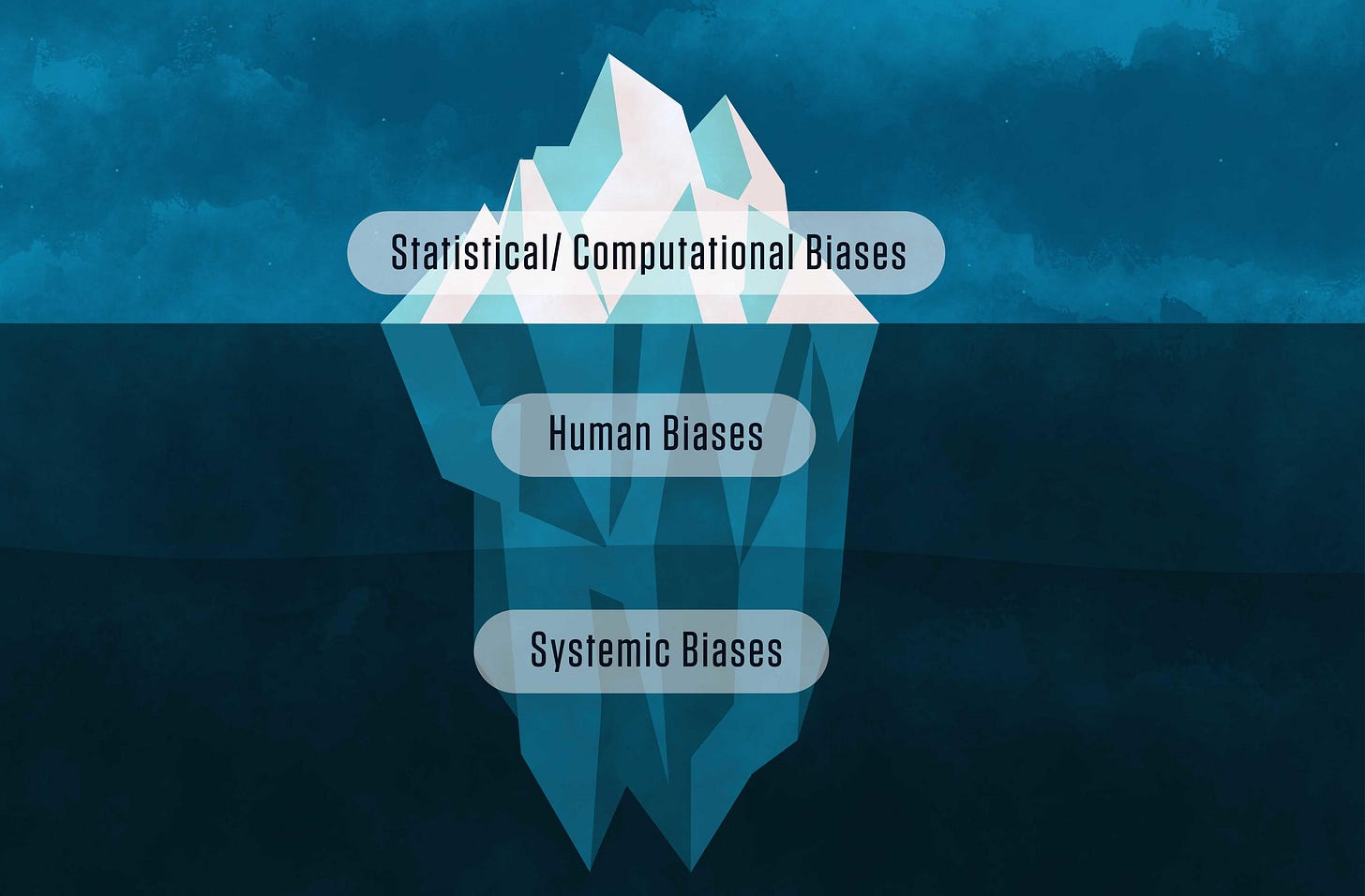

This picture gives a good overview of the different kinds of bias in AI, including human and systemic biases, and how they relate to each other.

Source: There’s More to AI Bias Than Biased Data, NIST Report Highlights | NIST

Why Should an AI Product Manager Know About Bias?

Every AI product embodies some type of bias, which not only influences its performance but also shapes customer perceptions. That is why understanding what bias is, where it comes from, and how to prevent it becomes a game-changer.

For AI Product Managers, though not only for them, a thorough understanding of bias enables us to develop better and more trustworthy AI products. Recognizing the various types of bias is the first step toward mitigating them, and with that, toward better performance.

It is crucial to recognize that the term bias might be misconstrued or completely misunderstood by non-technical stakeholders, and even by technical ones. If you cannot explain why bias causes a specific effect, you'll likely lose your stakeholders' trust in both you and your AI product sooner rather than later. I believe that openly discussing the limitations of our AI products can significantly boost user satisfaction, and bias introduced into our AI models is one such limitation our products must contend with. A lack of clarity about the specific biases at play can harm the user experience.

And, believe me, you don’t want a bad user experience as an AI Product Manager.

Furthermore, when Data Scientists or other AI experts tell you that bias is causing poor performance or unfair outcomes, it's critical to understand which type of bias they are referring to. This is especially true when it's your responsibility to plan the next sprint and mitigating that bias is one of its tasks.

It's also vital to assess whether you can realistically overcome the bias within a reasonable timeframe and at a justifiable cost. Attempting to reduce bias in the dataset without knowing at which step in the data pipeline it was introduced is a risky endeavor.

I hope this serves as sufficient motivation for you to delve deeper into this topic 🙂.

And even if you're not an AI Product Manager (AIPM) or aspiring to be one, but a Data Scientist or part of a startup looking to build AI products, you will become better at your job once you understand why simply saying "it's a bias issue" is not the best approach.

Do yourself and the WORLD a favor by grasping the underlying concept of bias. I promise it will alter your perspective and deepen your understanding of AI.

Create AI to forge a better future, not one that merely reinforces the existing state of affairs.

So, what exactly is bias?

Bias - The Common View

Outside our field, bias has a broader meaning. For those not deeply embedded in AI, the concept often resonates more with its everyday meanings than with the precise technical “definition” we apply in data science and AI.

Commonly, bias is recognized as a prejudice for or against something, someone, or a group compared with others, usually in a way considered unfair. Stakeholders might therefore initially interpret bias through this lens of social or cultural prejudice.

And, well, in the context of GenAI, this broader understanding of bias is quite fitting. Nowadays, there is a lot of discussion about GenAI models producing outputs that reflect racial or gender biases, among other types. Once you are aware of this widespread, everyday understanding of bias, the need for AI experts to bridge the gap becomes obvious: our discussions on bias must be accessible and relevant to all stakeholders.

Source: Generative AI Takes Stereotypes and Bias From Bad to Worse (bloomberg.com)

The broader definition of bias, valid as it is in the context of AI, doesn't fully capture the technical perspective. We, as AI experts, often invoke the latter to explain our models' unfair outputs or poor performance. This sometimes sounds like a justification: "Listen, our AI model is absolutely great, but it can only be as good as the underlying data - so don't blame us". This behavior is often called S.E.P. syndrome: somebody else's problem!

But, is it really somebody else's problem?

To explain what I mean, I need to delve into some conceptual details to better understand BIAS from a technical perspective.

💡 Fun fact

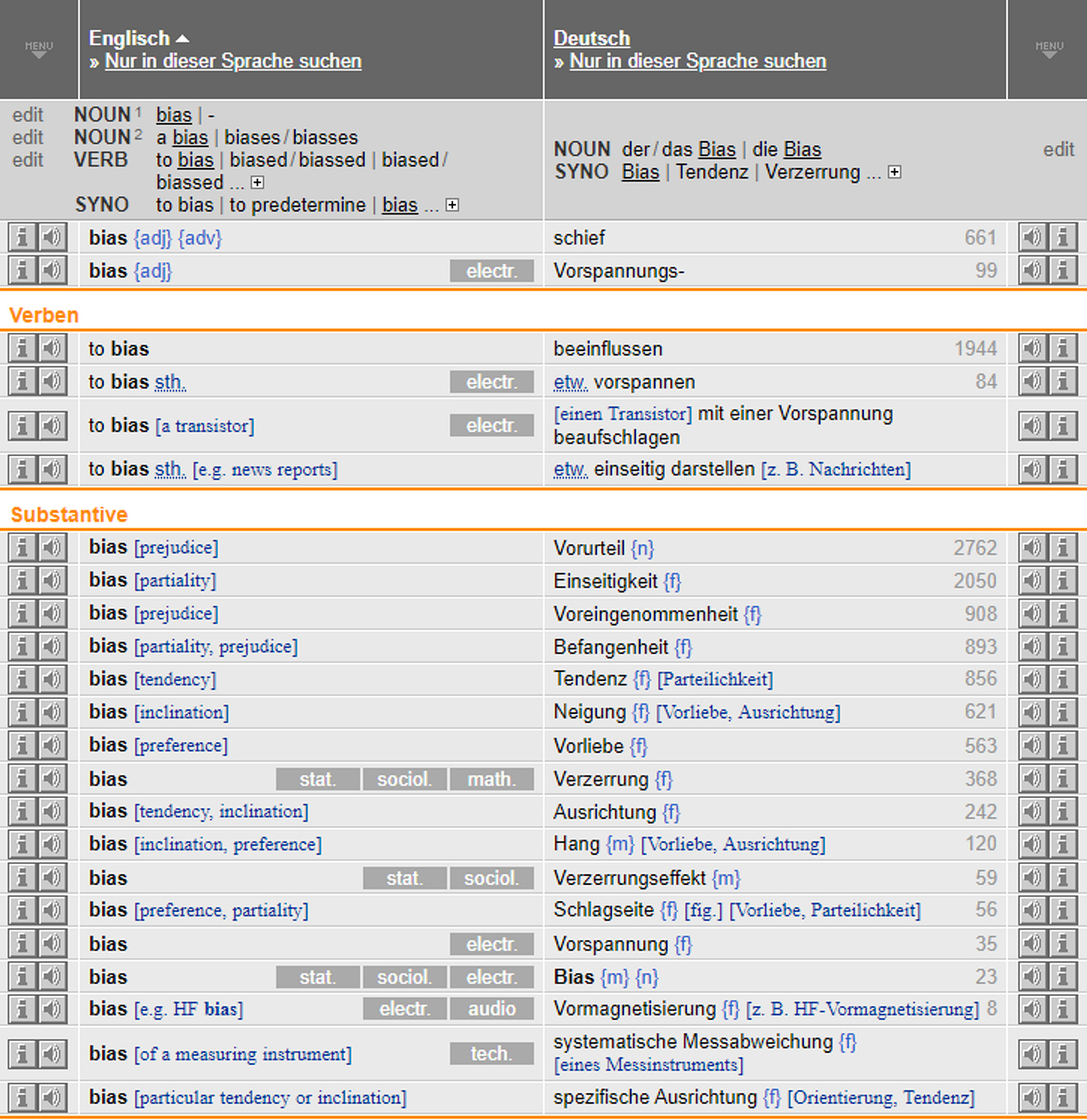

The word bias can be translated into German in at least 16 different ways. While things can sometimes be a bit complicated here in Germany, in this case the variety actually makes it easier for us to specify exactly which type of bias we mean.

Unfortunately, even German AI experts often stick to the English term, given the international nature of our field. It's great for consistency, but in this scenario, we face the same problem as every other AI expert worldwide!

Well, bad timing for consistency 🤣

Bias - The AI View

When we, as AI experts, say bias, we usually mean the technical “definition” rather than the common understanding. We can divide technical bias into two classes:

Data Bias and

Algorithmic Bias.

While some argue that Algorithmic Bias stems mainly from the underlying data bias, I think we should look at the two separately.

What is Data Bias?

This encompasses any bias that arises due to issues with the data itself, including how the data is collected, processed, and used to train AI models. Such biases can skew AI predictions, leading to outcomes that might not accurately reflect reality. It essentially means that if the foundational data is flawed, the AI's decisions based on that data may also be flawed.

From an AI product perspective, it’s a highly impactful issue: it affects everything from user experience to decision-making in critical areas.

But let’s try to make it comprehensible with a non-AI story:

If a school focuses exclusively on teaching history and offers just a single textbook for the entire year, students learn only what's in that book. Now, imagine some students switching schools before the year-end exams. In their new school, which also teaches only history, it turns out they use two textbooks instead of one. Luckily, in the final exams, these students can apply knowledge from the first book to answer questions related to the second. However, they struggle with questions unique to the second book. They pass their exams, but they don't perform as well as they might have in their original school. Their performance was capped by the preparation provided by their initial school.

While some students simply moved to another city, others moved to a neighboring country that had been at war with their own decades earlier. In this new country, history was also the only subject, taught from a single textbook. Having heard about the trouble the other students had with a second textbook, these students were relieved and happy. However, during the final exams, they faced unexpected challenges and failed. The reason? Each country taught a different perspective on the war, and the exam questions were based on the new country's viewpoint, which the students were unfamiliar with.

These examples represent two specific cases of data bias, with two different outcomes. I'll come back to them at the end of this post.

Here is another example that clarifies the concept of data bias from an AI perspective:

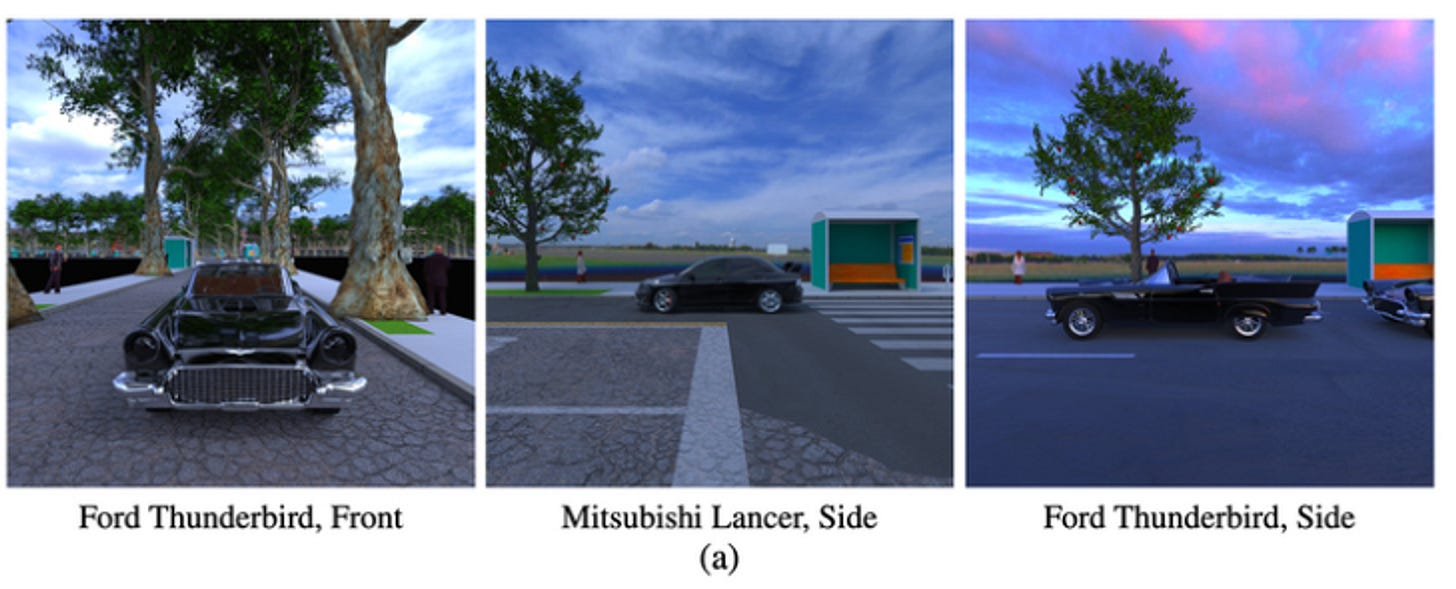

“[…] if researchers are training a model to classify cars in images, they want the model to learn what different cars look like. But if every Ford Thunderbird in the training dataset is shown from the front, when the trained model is given an image of a Ford Thunderbird shot from the side, it may misclassify it, even if it was trained on millions of car photos.” - Adam Zewe | MIT News Office
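To make the Thunderbird problem tangible: before training, one could audit the dataset's metadata for class/viewpoint combinations that never occur. The sketch below assumes each training image carries a hypothetical viewpoint tag next to its label; the records, the tag values, and the `coverage_gaps` helper are invented for illustration and are not taken from the MIT study.

```python
from collections import Counter
from itertools import product

# Hypothetical training metadata: (car model, camera viewpoint) per image.
# These records are made up purely for illustration.
training_metadata = [
    ("Ford Thunderbird", "front"),
    ("Ford Thunderbird", "front"),
    ("Ford Thunderbird", "front"),
    ("VW Beetle", "front"),
    ("VW Beetle", "side"),
    ("VW Beetle", "rear"),
]

# Assumed set of viewpoints we expect the model to handle in production.
VIEWPOINTS = ("front", "side", "rear")


def coverage_gaps(records, viewpoints=VIEWPOINTS):
    """Return every (class, viewpoint) pair that never appears in the records."""
    counts = Counter(records)
    classes = sorted({label for label, _ in records})
    return [
        (label, view)
        for label, view in product(classes, viewpoints)
        if counts[(label, view)] == 0
    ]


if __name__ == "__main__":
    for label, view in coverage_gaps(training_metadata):
        print(f"No training images of {label} from the {view}")
```

Such a check only finds gaps along dimensions someone thought to tag; a bias along an untagged dimension stays invisible.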

I like this last example most:

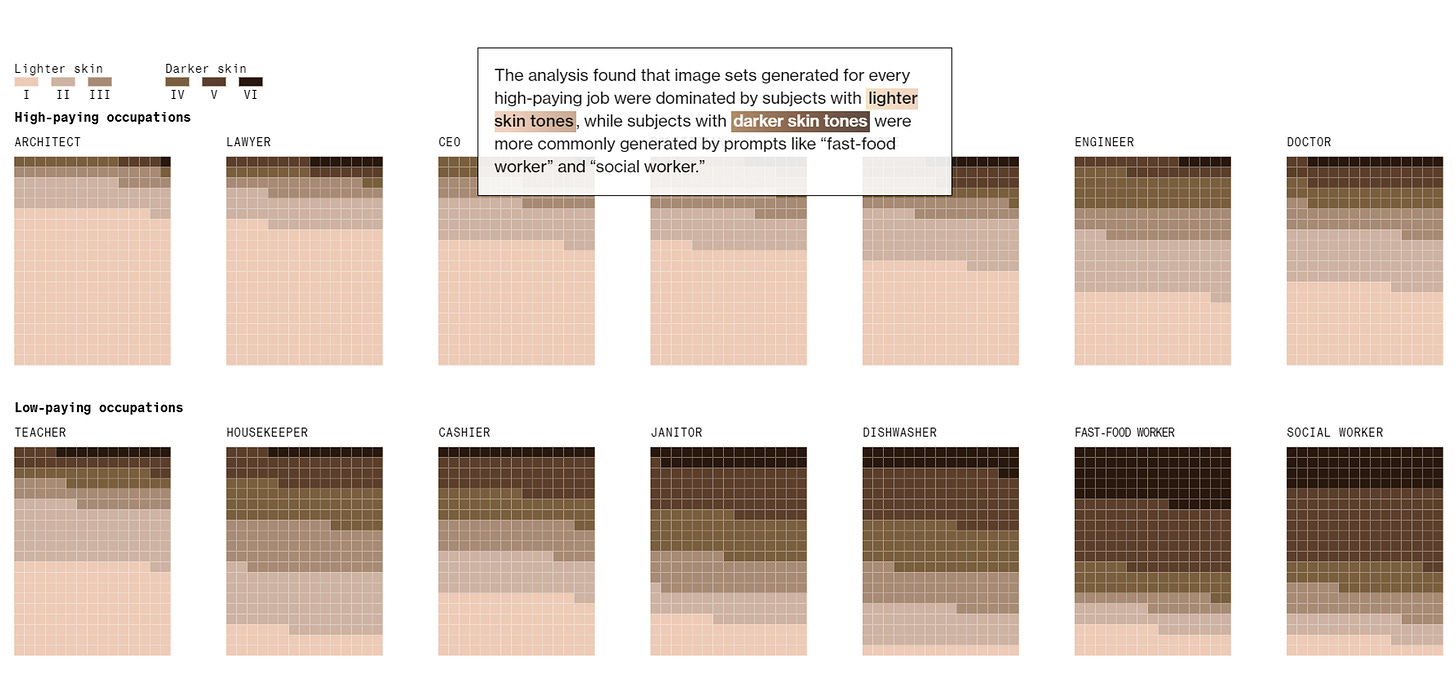

Bloomberg conducted an extensive experiment with a GenAI model, Stable Diffusion, to explore how it represents various jobs and crime scenarios. They asked the model to generate 300 images for each of 14 job titles: seven generally considered high-paying and seven viewed as low-paying in the U.S. Additionally, they tasked it with creating images for three crime-related scenarios. The experiment was designed to assess whether the model exhibits bias in how it depicts different professions and crime scenes. You might already guess the outcome, but the experiments are fascinating and a brilliant example of what data bias can cause. I recommend checking out their animated infographic on Bloomberg.

Source: Humans are biased - Bloomberg

Several types of bias typically lead to poor AI performance or unfair outcomes. The ones below are just a few I find important to mention; you can find others in the literature, for example: What Is AI Bias? | IBM

Prejudice Bias

Typically captured: During Data Collection

Example: If we only choose books that have stories about boys being heroes and none about girls, then we're not being fair to everyone. That's like prejudice bias, where the information we gather isn't fair from the start.
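A simple, admittedly crude first check for this kind of skew is to compare how often each group appears in the collected data with the share you would expect. The sketch below uses invented labels and counts; `group_shares`, `underrepresented`, and the 10-percentage-point tolerance are hypothetical choices. Note that a count can only flag imbalance; deciding what the expected share should be is itself a human judgment.

```python
from collections import Counter

# Hypothetical collection: the gender of the hero in each story we gathered.
# Labels and counts are invented for illustration.
collected_stories = ["boy"] * 9 + ["girl"] * 1


def group_shares(labels):
    """Observed share of each group in the collected data."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


def underrepresented(labels, expected_shares, tolerance=0.1):
    """Groups whose observed share falls short of the expected share by more than `tolerance`."""
    observed = group_shares(labels)
    return sorted(
        group
        for group, expected in expected_shares.items()
        # A group that is missing entirely counts as a share of 0.
        if expected - observed.get(group, 0.0) > tolerance
    )


if __name__ == "__main__":
    print(group_shares(collected_stories))
    print(underrepresented(collected_stories, {"boy": 0.5, "girl": 0.5}))  # ['girl']
```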

Measurement Bias

Typically captured: During Data Collection and Data Preprocessing

Example: Suppose we're using a ruler that starts at 2 cm instead of 0 cm to measure the length of sticks. All our measurements will be off by 2 cm. This is like measurement bias, where the way we collect or process our information makes it systematically inaccurate.
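The ruler example can be simulated in a few lines. This is a minimal sketch under assumed numbers (a 10 cm stick, a 2 cm offset, Gaussian reading noise with a standard deviation of 0.3 cm): random noise averages out over many readings, while the systematic offset does not, so collecting more data alone won't fix measurement bias.

```python
import random
import statistics

TRUE_LENGTH_CM = 10.0   # assumed true length of the stick
RULER_OFFSET_CM = 2.0   # the ruler's scale starts at 2 cm, so every reading is 2 cm too high
NOISE_SD_CM = 0.3       # assumed random reading noise


def measure(true_length, offset, rng):
    """One reading = true length + systematic offset + random noise."""
    return true_length + offset + rng.gauss(0.0, NOISE_SD_CM)


def mean_error(n_readings, offset, seed=42):
    """Average deviation of n readings from the true length."""
    rng = random.Random(seed)
    readings = [measure(TRUE_LENGTH_CM, offset, rng) for _ in range(n_readings)]
    return statistics.fmean(readings) - TRUE_LENGTH_CM


if __name__ == "__main__":
    for n in (10, 1_000, 100_000):
        print(n, "readings | fair ruler:", round(mean_error(n, 0.0), 3),
              "| 2 cm ruler:", round(mean_error(n, RULER_OFFSET_CM), 3))
```

With the fair ruler the mean error shrinks toward 0 as readings accumulate; with the shifted ruler it settles near 2 cm instead. Only a calibration against something of known length would reveal the offset.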

Selection Bias

Typically captured: During Data Collection and Data Splitting

Example: If we have a bag of red and blue marbles and only choose red marbles to show our friends, they might think there are only red marbles in the bag. This mistake, where we don't pick items fairly, is called selection bias.
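Both stages named above can be reproduced with the marble bag. In this sketch (the bag contents, seeds, and helper names are all hypothetical), picking only red marbles makes the sample look 100% red, and splitting a sorted dataset without shuffling leaves a test set with no red marbles at all. A uniform random sample or a shuffled split keeps both parts close to the true 50/50 mix.

```python
import random

# Hypothetical bag with equal numbers of red and blue marbles, stored in sorted order.
bag = ["red"] * 500 + ["blue"] * 500


def share_red(marbles):
    """Fraction of red marbles in a collection."""
    return marbles.count("red") / len(marbles)


def only_red_sample(population, k):
    """Selection bias during collection: we only ever pick red marbles."""
    return [m for m in population if m == "red"][:k]


def random_sample(population, k, seed=0):
    """Unbiased collection: every marble has the same chance of being picked."""
    return random.Random(seed).sample(population, k)


def naive_split(data, train_fraction=0.8):
    """Selection bias during splitting: cutting sorted data without shuffling."""
    cut = int(len(data) * train_fraction)
    return data[:cut], data[cut:]


def shuffled_split(data, train_fraction=0.8, seed=0):
    """Shuffle a copy first so that both parts resemble the whole."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


if __name__ == "__main__":
    print("only red:", share_red(only_red_sample(bag, 200)))             # 1.0
    print("random:", share_red(random_sample(bag, 200)))                 # close to 0.5
    print("naive test split:", share_red(naive_split(bag)[1]))          # 0.0
    print("shuffled test split:", share_red(shuffled_split(bag)[1]))    # close to 0.5
```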

Confirmation Bias

Typically captured: During Data Collection, Feature Engineering, and Feature Selection

Example: Let's say we believe that eating chocolate every day makes you smarter, so we only look for information that supports this idea and ignore anything that doesn't. This way of only seeing what we want to see is like confirmation bias, where we pick and choose data that agrees with our initial belief and overlook the rest.
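Confirmation bias can be shown with a deliberately tiny, invented dataset in which chocolate eaters and non-eaters have the same average score. The hypothetical `supports_belief` filter keeps only the records that fit the belief (high-scoring chocolate eaters, low-scoring non-eaters), and the apparent effect jumps from 0 to 20 points purely because of what we chose to look at.

```python
from statistics import mean

# Hypothetical records: (eats chocolate daily?, test score). Invented for illustration;
# in this toy data both groups have the same average score.
records = [
    (True, 70), (True, 90), (True, 60), (True, 80),
    (False, 75), (False, 85), (False, 65), (False, 75),
]


def score_gap(rows):
    """Average score of chocolate eaters minus average score of everyone else."""
    eaters = [score for chocolate, score in rows if chocolate]
    others = [score for chocolate, score in rows if not chocolate]
    return mean(eaters) - mean(others)


def supports_belief(row, threshold):
    """Keep only rows that fit the belief 'chocolate makes you smarter'."""
    chocolate, score = row
    return score > threshold if chocolate else score < threshold


overall_mean = mean(score for _, score in records)  # 75
cherry_picked = [row for row in records if supports_belief(row, overall_mean)]

if __name__ == "__main__":
    print("all data:", score_gap(records))            # 0
    print("cherry-picked:", score_gap(cherry_picked))  # 20
```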

These biases can pop up at any stage in the data pipeline. For each type, I'm just highlighting the stages where I run into it most often.

More importantly, do you notice the pattern? These data biases result from human decisions and actions. We inadvertently introduce our own biases into AI systems through how we collect data (colored by our prejudices) and how we measure it (with flawed techniques).

The old excuse, "...our AI model is absolutely great, but it can only be as good as the underlying data - so don't blame us," can now be seen in a new light. It turns out, we might be part of the problem, as usual. 😟

But there's even more to the story: Algorithmic Bias. We'll dive into what it means in the upcoming and final post of this series.

Before you leave: can you tell which biases affected the students in the school example above?

Prejudice,

Measurement,

Selection or

Confirmation Bias

Just use the comment function 😊. I hope you've enjoyed it so far.

See you then?

JBK 🕊️

#AIProductManagement
