Learn Why You Should Deep Dive into LLMs as an AIPM... 🤿

...and then let's deep dive together

#beyondAI

As an AI Product Manager transitioning from my earlier role as a Data Scientist, I’ve often felt a bit out of sync with the latest advancements in AI. Not in terms of being unaware of new developments, but more in lacking a deep, technical understanding of how these new technologies work in detail.

But I’ve come to terms with this.

I realized that as an AIPM, it’s not always necessary to be up-to-date on every technical nuance. What’s more important is knowing when to dive deeper into newly emerged technologies. For me, the right moment is when I need to apply a specific modeling approach.

And that moment is now.

There’s an ongoing debate within the AIPM community about how much of the inner workings of a technology an AIPM should understand. Some argue you need to be deeply knowledgeable, others believe it's more crucial to know how to effectively use AI, and some suggest you should be just knowledgeable enough to be “dangerous” to your data scientists and AI engineers. While I don’t advocate for creating a “dangerous” dynamic with your team, I understand the intent behind it.

In my experience, it’s always been incredibly helpful to have a solid understanding of the technology to engage in meaningful discussions with my data scientists and engineers. When they introduce concepts I’m unfamiliar with, my strong foundational knowledge allows me to grasp the idea quickly. This is when I perform at my best. Of course, I’m never as literate as the experts on my team, and that’s okay! They are immersed in the technology daily—that’s their job. So, it’s a trade-off when transitioning into an AI Product Manager role.

However, I’ll also admit that when starting a new AI product with an unfamiliar modeling approach, I’m not always sufficiently literate from the start. But with each passing week, I grow stronger. Typically, after 2-3 months, I reach a level where I’m satisfied with my understanding. One learning strategy that works well for me—though not the most time-efficient—is writing about what I’m learning.

Anyway, as AIPMs, we always have to remember that our main focus isn’t on development and modeling. We have to maintain a bird’s-eye view of the entire AI product lifecycle. That’s why it’s also crucial to prioritize learning in a way that maximizes impact at the right time, without losing sight of the bigger picture or spending too much time on a technology or methodology that isn’t immediately useful.

The field of GenAI, particularly with LLMs, can be overwhelming for AI Product Managers when it comes to deciding what to learn first. I’ve experienced this myself recently. As you might have seen in my article “I'm Back in the Natural Language Processing Game” or through my LinkedIn posts, I’m now fully immersed in the GenAI world. To perform at my best as a GenAI PM, I’ve delved deeply into understanding how LLMs work. And yes, it’s been quite time-consuming, even with my NLP background from university.

However, my approach to learning typically aligns with my preference to understand things from first principles. I like to grasp the core ideas in detail before piecing everything together. Admittedly, this isn’t the most time-efficient method for an AI Product Manager, but it works for me and has proven to be quite effective.

This approach also allows me to develop a plan for my future self (in case I forget some details) and for you as well—to learn LLMs more efficiently and effectively as an AI Product Manager.

The Cost of Non-Literate AI Product Managers

As I build AI products for a telecommunications company, it’s crucial that I first determine whether a problem my colleagues have is truly worth solving—and more importantly, in the context of this article, whether it can be translated into a viable AI use case.

I want to emphasize the “if” here. Often, business colleagues approach me with a rough idea, asking if we could create an “AI” solution to address their problem. Before diving deeper to assess if the problem is real and worth addressing, my first step is always to determine if they’ve contacted the right team. Frequently, I discover that we could achieve the desired outcome with a simple automation script that runs on a schedule and performs a specific, deterministic task.

No AI needed. Zero.

But I could build it with AI—just for the sake of using AI. And because I am an AI Product Manager 😉

However, doing so can quickly become very expensive. As a Product Manager, you own the Problem Discovery Phase, and you have the support of many highly skilled (and costly) experts. Setting up a meeting to discuss a problem with the intention of using AI, only to find out after 1-2 hours that AI isn’t the appropriate solution, is, simply put, a waste of resources. In a capitalist environment, you can imagine that this isn’t the most comfortable situation to be in.

But it’s not enough to merely determine if a problem could be an AI use case. You also need a mental model that guides you to the right AI model—one capable of solving the problem efficiently and effectively. Additionally, it’s important to understand who should be involved in alignment meetings to ensure the right expertise is on hand.

These are just some scenarios that illustrate why it’s crucial to have a deeper understanding of AI. There are many other situations where being sufficiently literate proves invaluable. But perhaps you should take a look at my article “Inside my typical week as an AI Product Manager” for more insights.

Now that you understand why I prefer to dive deeper than just the surface, and when I start learning new things, let’s get started and learn a bit more about LLMs.

Happy learning! 🛋️

What LLMs can do

For any AIPM dedicated to building LLM solutions, it’s absolutely essential to understand what LLMs can do.

Start here. Nowhere else. Learn the basics first!

And I also mean the basics of the original idea of LLMs, because modern LLMs (e.g. GPT-4o) can do far more.

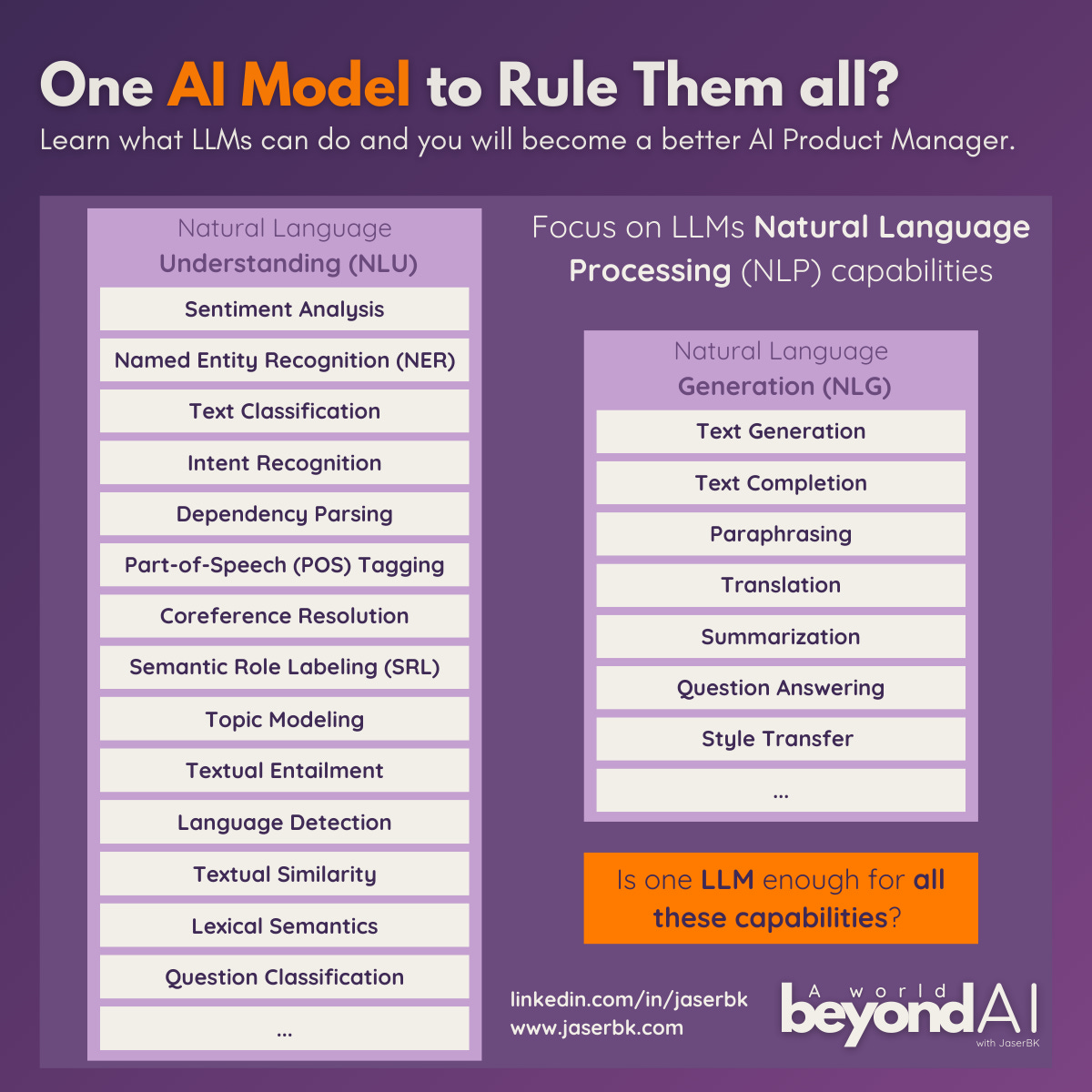

The core idea of LLMs was to provide an incredibly powerful model for processing natural language, commonly known as Natural Language Processing (NLP). And as all NLP experts know—and now you do too—NLP primarily consists of two categories: Natural Language Understanding (NLU) and Natural Language Generation (NLG).

Natural Language Understanding (NLU) is about enabling machines to comprehend and interpret human language. It involves breaking down the complexities of language (like context, sentiment, and intent) so that a model can grasp what’s being communicated. NLU allows AI to make sense of the input it receives, transforming raw text into structured data that can be analyzed or acted upon. It’s about understanding the “what” behind the words. There’s an ongoing and understandable, sometimes philosophical, debate about whether LLMs truly “understand” language or if they’re merely mimicking understanding. I won’t dive into that debate here, but if you're interested, here’s a great paper on the subject.

Natural Language Generation (NLG), on the other hand, focuses on the output. It’s about enabling machines to generate human-like language based on data or structured input. NLG powers the creation of coherent and contextually appropriate responses, summaries, or even entire articles. This is the aspect that often impresses people the most, and in the context of LLMs, it has even led to some controversy, such as when a Google software engineer was fired after publicly claiming that one of the company’s language models was sentient. The ability of LLMs to produce texts that feel so human captures the imagination and sometimes sparks debate about their sentience. But at the end of the day, it’s all about probability: “What is the next best word (token) to be shown in a sequence of words?”
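To make the “next best word” idea a bit more tangible, here is a minimal sketch in Python. The candidate tokens and their scores are made up for illustration; a real LLM scores tens of thousands of tokens with a neural network. The `softmax` helper and the greedy pick are my own simplification, not how any specific model is implemented.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Made-up scores for candidate next tokens after "The cat sat on the".
toy_scores = {"mat": 3.2, "sofa": 2.1, "roof": 1.4, "idea": -1.0}

probs = softmax(toy_scores)

# Greedy decoding: simply take the most probable token.
next_token = max(probs, key=probs.get)

for token, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{token:>5}: {p:.2f}")
print("Chosen next token:", next_token)
```

In practice, models often sample from these probabilities instead of always taking the top one, which is one reason the same prompt can produce different answers.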

Just by understanding which problems fall into specific NLP categories, you can effectively plan your next steps. Let's take a closer look at the main tasks in both categories and evaluate whether LLMs can handle these tasks.

One Model to Rule Them All?

LLMs like GPT-4 represent a significant shift away from narrow AI. While they are not AGIs (Artificial General Intelligences), LLMs are far more versatile than traditional narrow models. They can perform a wide range of tasks, such as translation, summarization, question-answering, and more, without the need for separate, specialized models for each task. This versatility makes them broader in scope than traditional narrow AI models. Additionally, it’s important to recognize that LLMs are evolving beyond pure NLP and increasingly handle vision and other multimodal tasks as well.

But for now, let’s examine how well they can perform typical NLP tasks.

📙 Important points to note: in the past, each NLP task typically required a specific narrow model. If you're curious about a particular task, you can Google it or ask GPT for the narrow model that was used; this can be particularly useful if you need to understand them in depth. After all, not every NLP task requires—or should even be tackled by—an LLM. But, more about that in another article.

Narrow models are designed to perform specific tasks or solve particular problems. They are highly specialized, excelling in one area but lacking the versatility to perform beyond their designated function. For example, a narrow model might be exceptionally good at sentiment analysis or text translation but wouldn't be able to handle both tasks efficiently without significant re-training or re-design.

Natural Language Understanding (NLU) Tasks

Sentiment Analysis: Determining the sentiment (positive, negative, neutral) expressed in a piece of text.

Can LLMs Perform This? Yes, LLMs can effectively perform sentiment analysis by understanding the context and sentiment in text.

Named Entity Recognition (NER): Identifying and classifying named entities (like people, organizations, locations) in text.

Can LLMs Perform This? Yes, LLMs are capable of recognizing and classifying named entities with high accuracy.

Text Classification: Assigning predefined categories or labels to text based on its content.

Can LLMs Perform This? Yes, LLMs can perform text classification, often with better accuracy and flexibility than traditional models.

Intent Recognition: Understanding the intent behind a user’s input, often used in conversational AI.

Can LLMs Perform This? Yes, LLMs can recognize intents by understanding the context and nuances in user inputs.

Dependency Parsing: Analyzing the grammatical structure of a sentence, identifying relationships between words.

Can LLMs Perform This? Yes, LLMs can perform dependency parsing as part of their broader language understanding capabilities.

Part-of-Speech (POS) Tagging: Identifying the grammatical categories (e.g., nouns, verbs, adjectives) of words in a sentence.

Can LLMs Perform This? Yes, LLMs can perform POS tagging accurately as part of their text processing abilities.

Coreference Resolution: Determining when different words refer to the same entity in a text (e.g., “Alice” and “she”).

Can LLMs Perform This? Yes, LLMs can resolve coreferences by understanding the context and relationships between words.

Semantic Role Labeling (SRL): Identifying the roles that words play in a sentence, such as who did what to whom.

Can LLMs Perform This? Yes, LLMs can understand and label semantic roles by comprehending sentence structures.

Topic Modeling: Discovering the abstract topics that occur in a collection of documents.

Can LLMs Perform This? Yes, LLMs can perform topic modeling, often more dynamically by identifying themes across texts.

Textual Entailment: Determining if a piece of text logically follows from another text.

Can LLMs Perform This? Yes, LLMs can perform textual entailment by understanding the logical relationships between sentences.

Language Detection: Identifying the language in which a given piece of text is written.

Can LLMs Perform This? Yes, LLMs can detect languages with high accuracy.

Textual Similarity: Measuring how similar two pieces of text are to each other.

Can LLMs Perform This? Yes, LLMs can assess textual similarity by comparing embeddings or overall context.

Lexical Semantics: Understanding the meaning of words and their relationships (e.g., synonyms, antonyms).

Can LLMs Perform This? Yes, LLMs can understand lexical semantics and provide related terms based on context.

Question Classification: Categorizing questions based on their type or expected answer (e.g., “Who,” “What,” “Where”).

Can LLMs Perform This? Yes, LLMs can classify questions and generate appropriate responses.
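One item in the list above, textual similarity by “comparing embeddings”, is easy to illustrate with a few lines of Python. This is only a sketch: the three 4-dimensional vectors are hypothetical stand-ins I made up, whereas real embeddings come from a model and usually have hundreds or thousands of dimensions. Cosine similarity is a common way to compare such vectors, though not the only one.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings, invented for illustration only.
toy_embeddings = {
    "refund request": [0.9, 0.1, 0.0, 0.2],
    "money back": [0.8, 0.2, 0.1, 0.3],
    "router setup": [0.1, 0.9, 0.4, 0.0],
}

similar = cosine_similarity(
    toy_embeddings["refund request"], toy_embeddings["money back"]
)
different = cosine_similarity(
    toy_embeddings["refund request"], toy_embeddings["router setup"]
)

print(f"refund request vs money back:   {similar:.2f}")
print(f"refund request vs router setup: {different:.2f}")
```

The closer the score is to 1, the more similar the two texts are considered to be, so related phrases should score higher than unrelated ones.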

Natural Language Generation (NLG) Tasks

Text Summarization: Producing a concise summary of a longer piece of text.

Can LLMs Perform This? Yes, LLMs can generate both extractive and abstractive summaries of text.

Machine Translation: Translating text from one language to another.

Can LLMs Perform This? Yes, LLMs can perform machine translation across a wide range of languages.

Question Answering: Generating answers to questions based on a given text or dataset.

Can LLMs Perform This? Yes, LLMs can answer questions based on their understanding of context and data.

Text Generation: Creating human-like text based on a given prompt or set of inputs.

Can LLMs Perform This? Yes, LLMs excel at generating coherent and contextually appropriate text.

Dialogue Generation: Producing natural and contextually appropriate responses in a conversation.

Can LLMs Perform This? Yes, LLMs can generate dialogue that is coherent and contextually relevant.

Paraphrase Generation: Rewriting a piece of text in different words while retaining the original meaning.

Can LLMs Perform This? Yes, LLMs can effectively generate paraphrases by rephrasing content while preserving meaning.

Style Transfer: Rewriting text in a different style (e.g., changing formal text to informal).

Can LLMs Perform This? Yes, LLMs can perform style transfer by adjusting the tone and style of text.
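What both task lists have in common is that the model stays the same and only the instruction changes. Here is a minimal sketch of that idea in Python. The templates, the `build_prompt` helper, and the example message are all hypothetical, and I deliberately leave out the actual model call, since that depends on the provider you use.

```python
# Hypothetical prompt templates: one per NLP task, all meant for the same LLM.
PROMPT_TEMPLATES = {
    "sentiment": (
        "Classify the sentiment of the following text as positive, "
        "negative, or neutral.\n\nText: {text}"
    ),
    "summarization": "Summarize the following text in one sentence.\n\nText: {text}",
    "translation": "Translate the following text into German.\n\nText: {text}",
}

def build_prompt(task, text):
    """Fill the template for the given task with the user's text."""
    if task not in PROMPT_TEMPLATES:
        raise ValueError(f"No template for task: {task}")
    return PROMPT_TEMPLATES[task].format(text=text)

customer_message = (
    "The technician arrived late, but he fixed my internet connection quickly."
)

# Same input text, three different tasks, one (not shown) model behind them.
for task in PROMPT_TEMPLATES:
    print(f"--- {task} ---")
    print(build_prompt(task, customer_message))
    print()
```

With narrow models, each of these three tasks would typically have needed its own trained model. Here, switching tasks is a matter of switching the instruction text.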

Final Thoughts

It really does seem that all classical NLP tasks can now be handled by a single model—a large one, indeed, but still a single, unified model. Each of these capabilities can individually serve as the solution to specific problems your colleagues or customers might face. However, some key questions remain:

Should LLMs always be preferred over specialized narrow models?

And what is the role of the training datasets for these LLMs?

Do all LLMs perform equally well on every task?

Even though LLMs are incredibly versatile, they aren't always superior to specialized narrow models. In certain tasks, narrow models can outperform LLMs due to their optimization for specific tasks, offering faster inference times and lower costs. This nuanced comparison between LLMs and narrow models could indeed be worth exploring in an article of its own.

Additionally, the quality and diversity of the training datasets used to develop these models play a crucial role in their performance. LLMs trained on vast and varied datasets may excel in generalization, but they might not always outperform specialized models trained on domain-specific data. This is also worth exploring from an AI Product Manager’s perspective in a separate article, potentially alongside techniques for adapting a pre-trained model, such as standard prompting, Retrieval-Augmented Generation (RAG), supervised/unsupervised fine-tuning, instruction tuning, and Reinforcement Learning from Human Feedback (RLHF). Let’s see.

Finally, not all LLMs are created equal. The performance of an LLM can vary significantly depending on its architecture, size, and specific training regimen. Exploring how these factors influence their effectiveness across different tasks could reveal whether one model truly can "rule them all," or if a more tailored approach is sometimes necessary.

These questions open up a rich field of discussion and spark ideas for future articles.

But I’ll definitely delve into some of the NLP capabilities of these LLMs and try to map them to real-world problems that your colleagues or customers might encounter. It will essentially be an AI Use Case Ideation session focused on NLP capabilities.

Sounds good?

I look forward to seeing you again.

JBK 🕊️

P.S. If you’ve found my posts valuable, consider supporting my work. You can help by sharing, liking, and commenting here or on my LinkedIn posts. This helps me reach more people on this journey, and your feedback is invaluable for improving the content. Thank you for being part of this community ❤️.
